Hello, this simple script is an update for another one I wrote eons ago.

Download the script <- seq2fas.pl

NOTE: It depends on the UNIX commands "pr", "sed", "tr" and "fold" to work ;)

It is very simple to use. The script takes a file with one sequence per line, like this:

ACCTTACGCC
AGTAACGTAG
TTAGTATATA
ACCTACGATA
AAACAGGCCC
ACCGCTAGAT
AGCCCCATCC
CCGGTATACC
AGCGGACCCC
AACAACCCCC

And prints the output in FASTA format:

>Random_seq-1
ACCTTACGCC
>Random_seq-2
AGTAACGTAG
>Random_seq-3
TTAGTATATA
>Random_seq-4
ACCTACGATA
>Random_seq-5
AAACAGGCCC
>Random_seq-6
ACCGCTAGAT
>Random_seq-7
AGCCCCATCC
>Random_seq-8
CCGGTATACC
>Random_seq-9
AGCGGACCCC
>Random_seq-10
AACAACCCCC

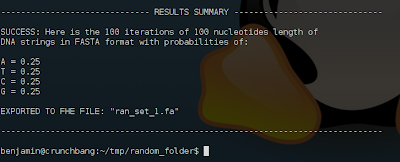

This script is very useful if you are working with artificial sequence sets generated in R, Perl, etc.
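
If you just want a small artificial set to try the script on, something like the following should do. It is only a sketch: the file name is borrowed from the usage example, the sequence count and length are arbitrary, and it assumes a system that provides /dev/urandom.

```shell
# Sketch: write 10 random DNA sequences of length 10, one per line.
# tr keeps only the bytes A, C, G and T from the random stream,
# fold cuts the stream into lines of 10 characters,
# head stops after 10 lines.
LC_ALL=C tr -dc 'ACGT' < /dev/urandom | fold -w 10 | head -n 10 > randomDNAsequences.dna
```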

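To run the script, download it, make it executable and run it:

$ chmod +x seq2fas.pl
$ perl seq2fas.pl

Run without arguments, it prints the usage message. It takes four arguments, in this order: the input file, the FASTA header prefix, the line width passed to "fold", and the output file. For example:

perl seq2fas.pl randomDNAsequences.dna random_seqs 60 randomSeqs.fas

With this command the headers come out as >random_seqs-1, >random_seqs-2 and so on.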

CODE:

#!/usr/bin/perl
################################################################################
# seq2fas.pl
# This script takes an input file like this:
#
# ACCTTACGCC
# AGTAACGTAG
# TTAGTATATA
# ACCTACGATA
# AAACAGGCCC
# ACCGCTAGAT
# AGCCCCATCC
# CCGGTATACC
# AGCGGACCCC
# AACAACCCCC
#
# and prints an output file in fasta format like this
# (here with "Random_seq" as the header):
#
# >Random_seq-1
# ACCTTACGCC
# >Random_seq-2
# AGTAACGTAG
# >Random_seq-3
# TTAGTATATA
# >Random_seq-4
# ACCTACGATA
# >Random_seq-5
# AAACAGGCCC
# >Random_seq-6
# ACCGCTAGAT
# >Random_seq-7
# AGCCCCATCC
# >Random_seq-8
# CCGGTATACC
# >Random_seq-9
# AGCGGACCCC
# >Random_seq-10
# AACAACCCCC
#
################################################################################
# Author: Benjamin Tovar
################################################################################
use warnings;
use strict;

my $USAGE = "
USAGE:
seq2fas.pl INPUT_FILE FASTA_HEADER WIDTH OUTPUT_FILE

EXAMPLE:
seq2fas.pl randomDNAsequences.dna random_seqs 60 randomSeqs.fas
";

# Positional arguments; the script dies with the usage message if one is missing
my $user_in = shift or die $USAGE;
my $fasta_header = shift or die $USAGE;
my $width = shift or die $USAGE;
my $output_file = shift or die $USAGE;

# pr numbers every line as "  N:sequence", sed replaces the leading spaces
# with ">header-", tr breaks the line at ":" and fold wraps at $width columns
system("pr -n:3 -t -T $user_in | sed 's/^[ ]*/>$fasta_header-/' | tr \":\" \"\n\" | fold -w $width > $output_file");

exit;
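
To see what each command in that pipeline contributes, here is the same chain written as a plain shell function. This is only a sketch for experimenting: the function name seq2fas and the file demo.dna are made up for the example, and it assumes GNU pr, since the -T option comes from GNU coreutils.

```shell
# Hypothetical shell function mirroring the pipeline inside seq2fas.pl.
# Arguments: input file, header prefix, fold width.
seq2fas() {
    pr -n:3 -t -T "$1" |        # number each line, e.g. "  1:ACCTTACGCC"
        sed "s/^[ ]*/>$2-/" |   # leading spaces become ">prefix-"
        tr ':' '\n' |           # put header and sequence on separate lines
        fold -w "$3"            # wrap long lines at the requested width
}

# Demo with three of the sequences from the example above
printf 'ACCTTACGCC\nAGTAACGTAG\nTTAGTATATA\n' > demo.dna
seq2fas demo.dna Random_seq 60
# >Random_seq-1
# ACCTTACGCC
# >Random_seq-2
# AGTAACGTAG
# >Random_seq-3
# TTAGTATATA
```

Two things to keep in mind when choosing the header: it is pasted into the sed expression, so a "/" in it breaks the substitution, and a ":" in it would be turned into a line break by tr.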

Author: Benjamin Tovar